STACKIT AI Model Serving is STACKIT's service for cloud-hosted LLMs. It is an easy-to-use service that exposes large language models through OpenAI-API-compatible endpoints. At the time of writing, five models are available: four chat models and one model intended for embeddings.

Available models at STACKIT AI Model Serving

| Model | Description |
|---|---|
| cortecs/Llama-3.3-70B-Instruct-FP8-Dynamic | Llama 3.3 70B is an instruction-tuned generative language model optimized for multilingual dialogue. Meta AI released it on December 6, 2024. It uses an optimized transformer architecture and is aligned with human preferences for helpfulness and safety through supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF). Its knowledge cutoff is December 31, 2023. The served weights are quantized to FP8. |
| google/gemma-3-27b-it | Gemma 3 27B IT is the instruction-tuned ("IT") variant of Google's 27-billion-parameter Gemma 3 model. It is a general-purpose multilingual model suited to tasks such as question answering, summarization, translation, and content creation, and its instruction tuning lets it follow user prompts effectively and produce context-aware responses. |
| neuralmagic/Mistral-Nemo-Instruct-2407-FP8 | Mistral-Nemo-Instruct-2407 is a 12-billion-parameter instruction-tuned LLM developed jointly by Mistral AI and NVIDIA and released in July 2024. Designed for multilingual applications, it performs well at conversational dialogue, code generation, and instruction following across many languages. |
| neuralmagic/Meta-Llama-3.1-8B-Instruct-FP8 | Llama 3.1 8B is an auto-regressive language model built on an optimized transformer architecture. Meta AI released it on July 23, 2024. It is aligned with human preferences for helpfulness and safety through SFT and RLHF. Its knowledge cutoff is December 31, 2023. |
| intfloat/e5-mistral-7b-instruct | E5-Mistral-7B-Instruct is a text embedding model initialized from Mistral 7B and fine-tuned to turn text into vectors for semantic search, retrieval, and clustering. It benefits from the strong Mistral 7B base model. This is the model to use for embeddings; the other four are chat models. |
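To illustrate how the embedding model is typically used, here is a minimal Python sketch using only the standard library. It formats a search query with the `Instruct: <task>\nQuery: <query>` prefix recommended on the e5-mistral-7b-instruct model card, builds the JSON body of an OpenAI-style `/embeddings` request, and compares two vectors with cosine similarity. The helper names are hypothetical, and the request body assumes STACKIT accepts the standard OpenAI embeddings fields `model` and `input`.

```python
import json
import math

# Model name as listed in the table; the query prefix below follows the
# convention from the e5-mistral-7b-instruct model card (queries only).
EMBEDDING_MODEL = "intfloat/e5-mistral-7b-instruct"


def format_query(task: str, query: str) -> str:
    """Prefix a search query with a one-sentence task instruction."""
    return f"Instruct: {task}\nQuery: {query}"


def embeddings_payload(texts: list[str]) -> str:
    """JSON body for an OpenAI-style POST /embeddings request (assumed fields)."""
    return json.dumps({"model": EMBEDDING_MODEL, "input": texts})


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two embedding vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


query = format_query(
    "Given a question, retrieve passages that answer it",
    "How much does Llama 3.3 70B cost?",
)
print(embeddings_payload([query]))
print(cosine_similarity([1.0, 0.0], [1.0, 1.0]))
```

Documents that are searched are embedded without the instruction prefix; only queries get it.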

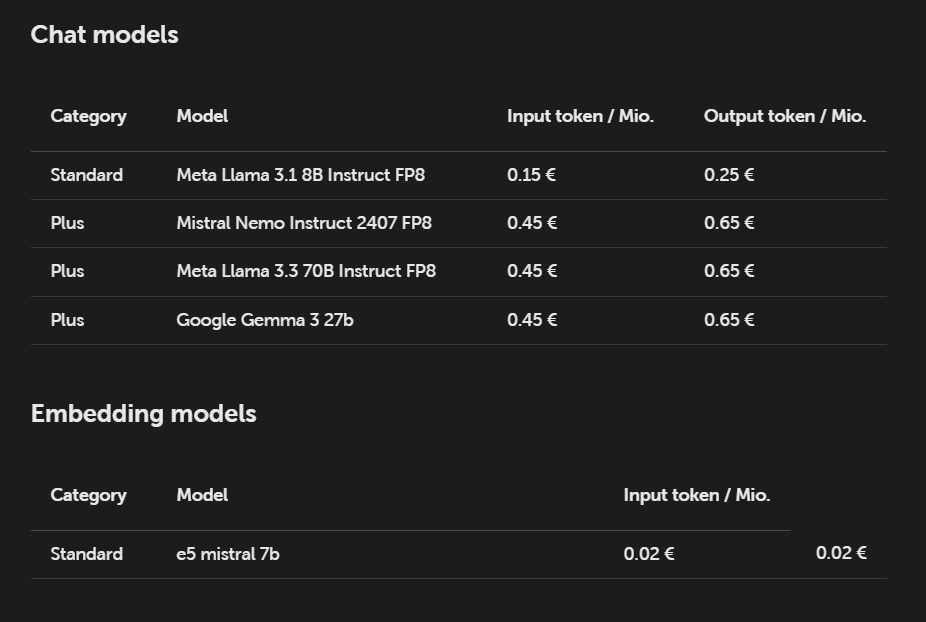

AI Model Serving Pricing

The pricing is simple: chat models are billed per input and output token, the embedding model per input token only. Most chat models cost 0,45 € per million input tokens and 0,65 € per million output tokens. Only Llama 3.1 8B is cheaper.

The embedding model e5-mistral-7b costs 0,02 € per million input tokens.
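Because billing is per token, estimating a bill is simple arithmetic. A minimal Python sketch, assuming the standard chat rates and the embedding rate above (Llama 3.1 8B's lower price is not stated here, so it is left out; the workload sizes are made-up example values):

```python
# Per-million-token prices in EUR for STACKIT AI Model Serving, as quoted above.
CHAT_INPUT_EUR_PER_M = 0.45
CHAT_OUTPUT_EUR_PER_M = 0.65
EMBEDDING_INPUT_EUR_PER_M = 0.02


def chat_cost_eur(input_tokens: int, output_tokens: int) -> float:
    """Cost of a chat workload at the standard chat-model rates."""
    return (input_tokens * CHAT_INPUT_EUR_PER_M
            + output_tokens * CHAT_OUTPUT_EUR_PER_M) / 1_000_000


def embedding_cost_eur(input_tokens: int) -> float:
    """Cost of embedding input_tokens with e5-mistral-7b (input tokens only)."""
    return input_tokens * EMBEDDING_INPUT_EUR_PER_M / 1_000_000


# Example: 2M input + 0.5M output chat tokens, plus 10M tokens embedded.
print(round(chat_cost_eur(2_000_000, 500_000), 4))  # 1.225
print(round(embedding_cost_eur(10_000_000), 4))     # 0.2
```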

Taking Llama 3.3 70B as a benchmark, STACKIT AI Model Serving is priced very competitively compared with OVHcloud AI Endpoints and the other cloud vendors below. Only Azure is cheaper. All other vendors listed, including AWS, charge more per input token, and all except IONOS also charge more per output token.

| Vendor | Model | Input (€ / 1M tokens) | Output (€ / 1M tokens) |
|---|---|---|---|
| IONOS AI Model Hub | Llama 3.3 70B Instruct | 0,65 € | 0,65 € |
| Scaleway Generative APIs | Llama 3.3 70B Instruct | 0,90 € | 0,90 € |
| OVHcloud AI Endpoints | Llama 3.3 70B Instruct | 0,79 € | 0,79 € |
| AWS Bedrock | Llama 3.3 70B Instruct | 0,72 € | 0,72 € |
| STACKIT AI Model Serving | Llama 3.3 70B Instruct | 0,45 € | 0,65 € |
| Azure AI | Llama 3.3 70B Instruct | 0,268 € | 0,354 € |
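To see how the comparison plays out for a concrete workload, the following Python sketch ranks the vendors by total cost. The prices are copied from the table; the workload of 3M input and 1M output tokens is a made-up example.

```python
# Llama 3.3 70B prices in EUR per 1M tokens (input, output), from the table.
PRICES = {
    "IONOS AI Model Hub": (0.65, 0.65),
    "Scaleway Generative APIs": (0.90, 0.90),
    "OVHcloud AI Endpoints": (0.79, 0.79),
    "AWS Bedrock": (0.72, 0.72),
    "STACKIT AI Model Serving": (0.45, 0.65),
    "Azure AI": (0.268, 0.354),
}


def workload_cost(prices: tuple[float, float], input_m: float, output_m: float) -> float:
    """EUR cost for input_m / output_m million tokens at the given prices."""
    price_in, price_out = prices
    return price_in * input_m + price_out * output_m


def rank_vendors(input_m: float, output_m: float) -> list[tuple[str, float]]:
    """Vendors sorted from cheapest to most expensive for one workload."""
    costs = [(name, workload_cost(p, input_m, output_m)) for name, p in PRICES.items()]
    return sorted(costs, key=lambda item: item[1])


# Example: an input-heavy workload with 3M input and 1M output tokens.
for name, cost in rank_vendors(3, 1):
    print(f"{name:<26} {cost:6.2f} €")
```

For this workload Azure comes first (about 1,16 €) and STACKIT second (2,00 €). STACKIT's lead over the remaining vendors comes from its lower input price, so it grows as the share of input tokens grows; for output-only workloads it ties with IONOS.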

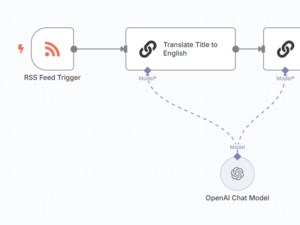

Use STACKIT AI Model Serving LLMs with the OpenAI API

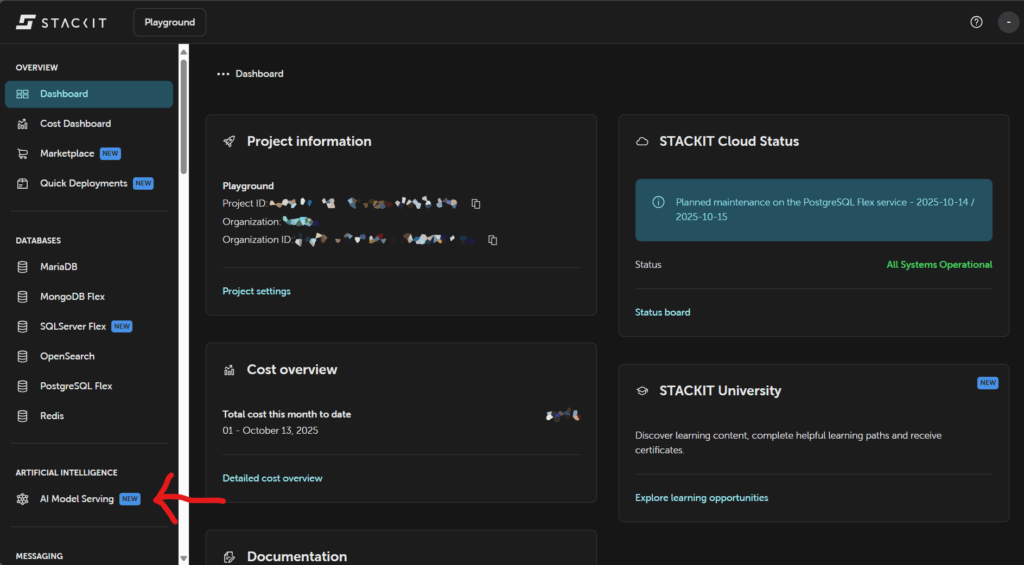

Getting started with the models is straightforward. In the STACKIT console, navigate to AI Model Serving.

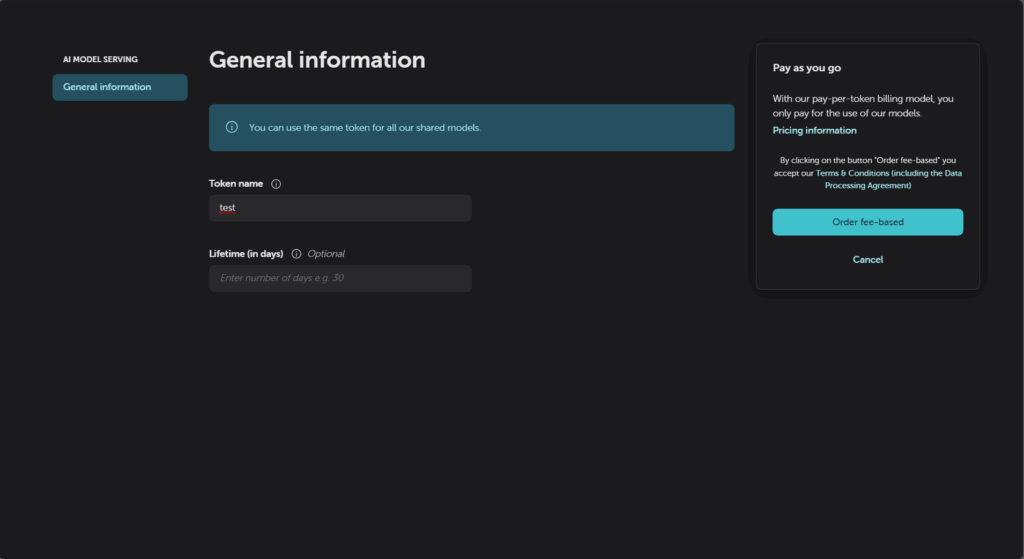

In the AI Model Serving section, create a token. Creating a token requires a name and a token lifetime.

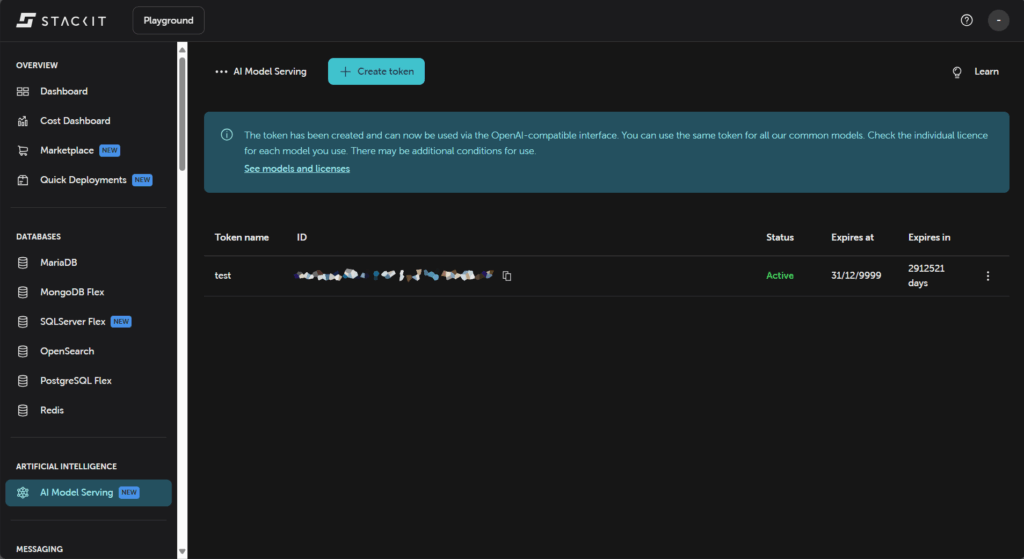

Once created, the token works with all STACKIT AI Model Serving models through the OpenAI-compatible interface.
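Because the interface is OpenAI-compatible, any HTTP client can use it. Below is a minimal standard-library Python sketch of a chat completion call. The base URL is an assumption (copy the exact one from the STACKIT console), and the environment variable name `STACKIT_AI_TOKEN` is hypothetical.

```python
import json
import os
import urllib.request

# Assumption: base URL of the OpenAI-compatible endpoint; verify it against
# the URL shown in the STACKIT console before use.
BASE_URL = "https://api.openai-compat.model-serving.eu01.onstackit.cloud/v1"


def build_chat_request(token: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style POST /chat/completions request (not yet sent)."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # the token created in the console
            "Content-Type": "application/json",
        },
        method="POST",
    )


def chat(token: str, model: str, prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(token, model, prompt)
    with urllib.request.urlopen(request, timeout=60) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


# Hypothetical environment variable; nothing is sent if it is not set.
token = os.environ.get("STACKIT_AI_TOKEN")
if token:
    print(chat(token, "cortecs/Llama-3.3-70B-Instruct-FP8-Dynamic", "Hello!"))
```

With the official `openai` Python package, the equivalent is to create the client with `OpenAI(base_url=BASE_URL, api_key=token)` and call `client.chat.completions.create(model=..., messages=[...])`.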

Outlook

STACKIT AI Model Serving is as easy to use as it gets and is very competitively priced. I hope the current models are just the start and that more will follow soon. One model I am interested in and would like to see hosted is the Swiss model Apertus.